Tuesday, April 18, 2006

RepRap application

Adrian and I made some inroads towards merging bits of code.

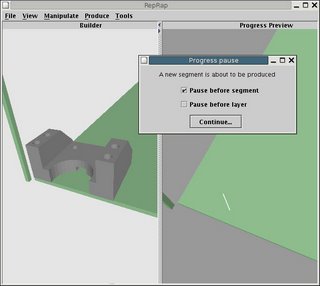

This is the current RepRap application, showing both the construction view and the production progress window. The progress area optionally lets you pause the process so you can inspect what is being printed. Throughout this time, both panels can still be fully manipulated and inspected.

What's especially fun about this is that it is all talking to the hardware now too, so you can watch the motors whirling away, changing direction, etc. as the model builds up. If only I had a frame to put it all on :)

Without the hardware installed, you can select the null device in the preferences screen and still emulate the process.
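A minimal Java sketch of how a selectable null device can work. `StepperDevice`, `NullStepperDevice`, `DeviceFactory` and the preference value `"null"` are all hypothetical names, since the post doesn't show the application's actual classes. The null implementation does no I/O but still tracks position and logs each command, so the rest of the application can run unchanged.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical device interface; the real RepRap class names are not given here.
interface StepperDevice {
    // Move the axis by a signed number of steps.
    void step(int steps);

    // Position the device believes it is at, in steps.
    int position();
}

// Stands in for real hardware: no I/O, but position is tracked and every
// command is logged so a build can be emulated and inspected.
class NullStepperDevice implements StepperDevice {
    private int position = 0;
    private final List<String> commandLog = new ArrayList<>();

    @Override
    public void step(int steps) {
        position += steps;
        commandLog.add("step " + steps);
    }

    @Override
    public int position() {
        return position;
    }

    List<String> commandLog() {
        return commandLog;
    }
}

// Picks a device from a (hypothetical) preference value.
final class DeviceFactory {
    private DeviceFactory() {}

    static StepperDevice create(String preference) {
        if ("null".equals(preference)) {
            return new NullStepperDevice();
        }
        // A serial-port-backed device would be constructed here.
        throw new IllegalArgumentException("no driver for device: " + preference);
    }
}
```

Because the rest of the code only sees `StepperDevice`, switching between emulation and real hardware is a one-line preference change.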

There's still plenty to do of course. As you can see, what is being produced on that screen doesn't actually match the scene on the left, and that's some of the magic that Adrian is part way through.

Comments:

Is the source code available somewhere? I'd like to take a look; maybe there's some way I could contribute after all (I've been writing code for 26+ years and am pretty OK with Java and comms issues).

I'm also experienced with the Microsoft programming platforms (C++ and VB).

Yes - we mirror the CVS tree on the Wiki. Bear in mind that it's very much under development, so the extensive documentation may miss the odd full stop...

It's at:

http://reprapdoc.voodoo.co.nz/cvs/reprap/

I was wondering: will the final package contain adjustment controls for people whose first RepStrap machine deviates slightly from the design? Say they can't make the nozzle exactly the same diameter, only a similar one, or their salvaged motors run at a different speed?

Yes - we're trying to accommodate as much of that sort of thing as possible. To take your example, we seem to be converging on 0.5mm diameter nozzles. But the software that drives the machine treats the width of the deposited polymer track (which obviously depends on the nozzle diameter) as a parameter set in a machine configuration file. All the high-level software works in mm (there'll be a button to convert inch STL files to mm, too), and there are factors that translate mm into machine steps. Again, these are in a configuration file. As speeds are specified in mm/s, they get converted automatically as well.
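The unit handling described above might look something like this. It's only a sketch: `MachineConfig` and the property keys `xAxis.stepsPerMm` and `extruder.trackWidthMm` are hypothetical, not the real RepRap configuration format. The point is that the machine-specific numbers live in configuration, and everything else is computed from mm.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical machine configuration; key names are illustrative only.
final class MachineConfig {
    static final double MM_PER_INCH = 25.4;

    final double stepsPerMmX;  // machine-specific: motor step angle, drive train
    final double trackWidthMm; // depends on the nozzle diameter

    private MachineConfig(double stepsPerMmX, double trackWidthMm) {
        this.stepsPerMmX = stepsPerMmX;
        this.trackWidthMm = trackWidthMm;
    }

    // Parse "key = value" lines (java.util.Properties format).
    // A missing key makes parseDouble throw a NullPointerException.
    static MachineConfig parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new MachineConfig(
                Double.parseDouble(p.getProperty("xAxis.stepsPerMm")),
                Double.parseDouble(p.getProperty("extruder.trackWidthMm")));
    }

    // Convert a coordinate from an inch-based STL file into mm.
    static double inchesToMm(double inches) {
        return inches * MM_PER_INCH;
    }

    // Convert a distance in mm into the nearest whole number of motor steps.
    long mmToStepsX(double mm) {
        return Math.round(mm * stepsPerMmX);
    }

    // Speeds are kept in mm/s, so only the steps-per-mm factor is machine-specific.
    double mmPerSecToStepsPerSecX(double mmPerSec) {
        return mmPerSec * stepsPerMmX;
    }

    // Side-by-side tracks needed to cover a region of the given width
    // (ignores any deliberate overlap between tracks).
    int tracksToFill(double widthMm) {
        return (int) Math.ceil(widthMm / trackWidthMm);
    }
}
```

With this arrangement, fitting a salvaged motor with a different step angle means editing one number in the file rather than the code.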

It is possible to write timing-sensitive Java code, but it requires a bit of care. Offloading work to binary drivers isn't necessary, however, as we can make the comms sufficiently robust.

The trick is that we have to use synchronisation for the timing-sensitive parts anyway. This is handled inside the RepRap. Our Java code just needs to send atomic operations to the devices in the field.
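To illustrate what sending atomic operations can mean, here is a sketch of a self-contained move command. The 7-byte wire format, the opcode and the `MoveCommand` class are all hypothetical, not the actual RepRap protocol. Each message carries an absolute target and a speed, so the device can run the whole move on its own clock and the host's timing between messages doesn't matter.

```java
import java.nio.ByteBuffer;

// Hypothetical wire format, not the real RepRap protocol:
// [opcode: 1 byte][absolute target in steps: 4 bytes][speed in steps/s: 2 bytes],
// big-endian (ByteBuffer's default byte order).
final class MoveCommand {
    static final byte OPCODE_MOVE = 0x01;
    static final int SIZE = 1 + Integer.BYTES + Short.BYTES;

    final int targetSteps;   // absolute position, so a repeated message is harmless
    final short stepsPerSec; // the device times the individual steps itself

    MoveCommand(int targetSteps, short stepsPerSec) {
        this.targetSteps = targetSteps;
        this.stepsPerSec = stepsPerSec;
    }

    // Serialize the whole move into a single message.
    byte[] encode() {
        return ByteBuffer.allocate(SIZE)
                .put(OPCODE_MOVE)
                .putInt(targetSteps)
                .putShort(stepsPerSec)
                .array();
    }

    // Device-side parse: reject anything that isn't one complete move message.
    static MoveCommand decode(byte[] msg) {
        ByteBuffer buf = ByteBuffer.wrap(msg);
        if (msg.length != SIZE || buf.get() != OPCODE_MOVE) {
            throw new IllegalArgumentException("not a complete move command");
        }
        return new MoveCommand(buf.getInt(), buf.getShort());
    }
}
```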

As an aside, I think binary drivers would scare the bejesus out of too many potential developers :)

Vik :v)

I'm going to have to go with Vik on this one. I must respectfully disagree that Java was designed just for scripting. A properly designed and compiled Java program should be robust enough to handle the timing cycles. Java was originally designed for use in microwaves, television sets and refrigerators (I think it was called Oak back then), and it was the sudden explosion of the web, which got it ported to the browser environment, that gave it a questionable reputation. JavaScript and Java applets are completely different from Java programs. The Java VM is actually quite slick and capable, so long as its behaviour is tested and standardized, which will be necessary with any new variation of RepRap anyway.

I think you're going to choke on details if you try to constrain this system to a machine-coded driver set. What happens when someone comes along with a different machine? Someone will have to rewrite the machine-code drivers every single time a different type of machine is used. A simple testing feedback loop through the Java VM, by contrast, can establish operational parameters, which then allow the correct settings to be chosen. Once standardized (so long as you aren't also editing text or doing something else with the CPU), the behaviour of the VM would be constant.
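A rough sketch of the kind of timing feedback loop described above, assuming the parameter of interest is how late `Thread.sleep` actually wakes up on a given JVM and machine. `TimingCalibration` is a hypothetical helper, not part of the RepRap code; a host could use the measured worst case as a safety margin when deciding how far ahead to send commands. Results vary from run to run and machine to machine.

```java
// Hypothetical calibration helper, not part of the RepRap code base.
// Measures how much later than requested Thread.sleep returns on this
// JVM and machine, as a basis for a timing safety margin.
final class TimingCalibration {
    private TimingCalibration() {}

    // Worst observed overshoot in microseconds over `trials` sleeps of
    // `requestedMillis` each; 0 if no trial overshot (or trials == 0).
    static long worstOvershootMicros(int trials, long requestedMillis) {
        long requestedNanos = requestedMillis * 1_000_000L;
        long worstNanos = 0;
        for (int i = 0; i < trials; i++) {
            long start = System.nanoTime();
            try {
                Thread.sleep(requestedMillis);
            } catch (InterruptedException e) {
                // Preserve the interrupt and report what was measured so far.
                Thread.currentThread().interrupt();
                break;
            }
            long overshootNanos = System.nanoTime() - start - requestedNanos;
            worstNanos = Math.max(worstNanos, overshootNanos);
        }
        return worstNanos / 1_000L;
    }
}
```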

Machine-coded drivers don't scare me; they're just irritating, like poison oak.
